Boosting Jamstack Site Performance With Edge Functions

How can the edge help boost your sites' performance?

Hey folks! Hope everyone has been having a good weekend so far. It was great seeing so many folks at Jamstack Conf last week, and hearing about all the cool projects and companies that are being built in the Jamstack space right now.

For those who weren’t able to tune in to watch the livestream, don’t worry, I’m sure the recordings will be uploaded relatively soon (pretty sure they’re going to appear on the Jamstack Conf YouTube channel).

In the meantime, if you were interested in catching my talk on “Boosting Jamstack Site Performance with Edge Functions”, here’s the talk in blog post form.

Enjoy!

A lot of the marketing around edge functions focuses on how they help address performance bottlenecks and high latency for users interacting with your site from certain parts of the world.

But in order to understand how edge functions help address these issues, we first need to take a step back and understand what the ‘edge’ in ‘edge functions’ is.

To do that, we first need to quickly talk about how data centres are organized on cloud providers like AWS, Google Cloud Platform, or Microsoft Azure.

How are data centres organized, and what is the ‘edge’?

At the highest level, data centres are organized by ‘region’. These are the “US-West-1” or “Canada-Central” options you see when first choosing a hosting location for your website. Within those regions are ‘availability zones’, also known as ‘AZs’. Each AZ contains one or more data centres, and when we talk about ‘origin servers’, we’re commonly talking about servers located within one of these data centres.

Knowing that, the ‘edge’ refers to data centres that live outside of an AZ, and an ‘edge function’ is a function that runs in one of these data centres. Depending on your cloud provider, you might also see the terms ‘point of presence’ (a.k.a. POP) or ‘edge location’ used to refer to these data centres.

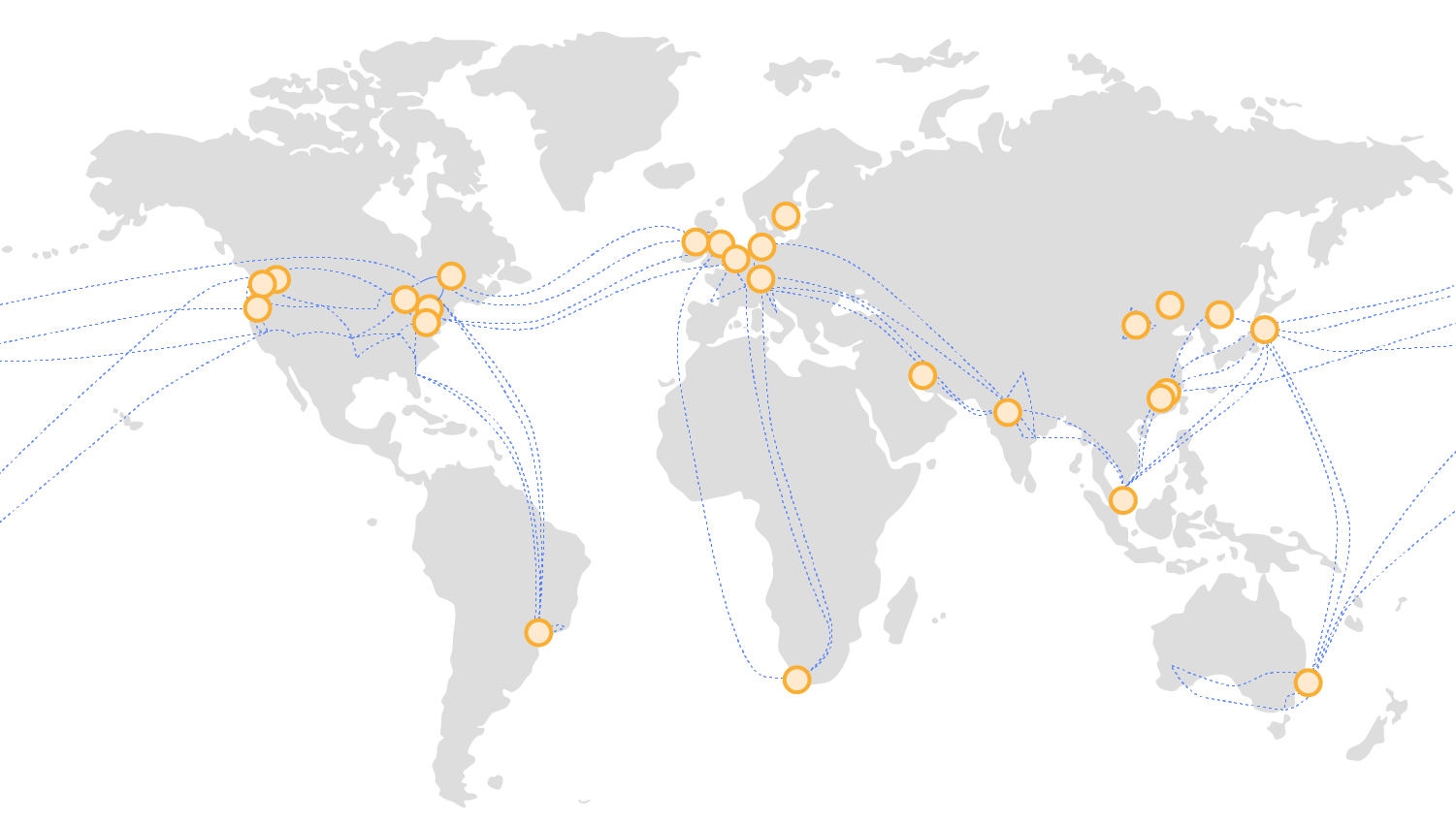

To give a sense of how many more edge locations there are in the world relative to AZs, let’s look at a couple of maps. Below is an image taken from AWS' documentation, which shows all of the AZs in their network across the globe:

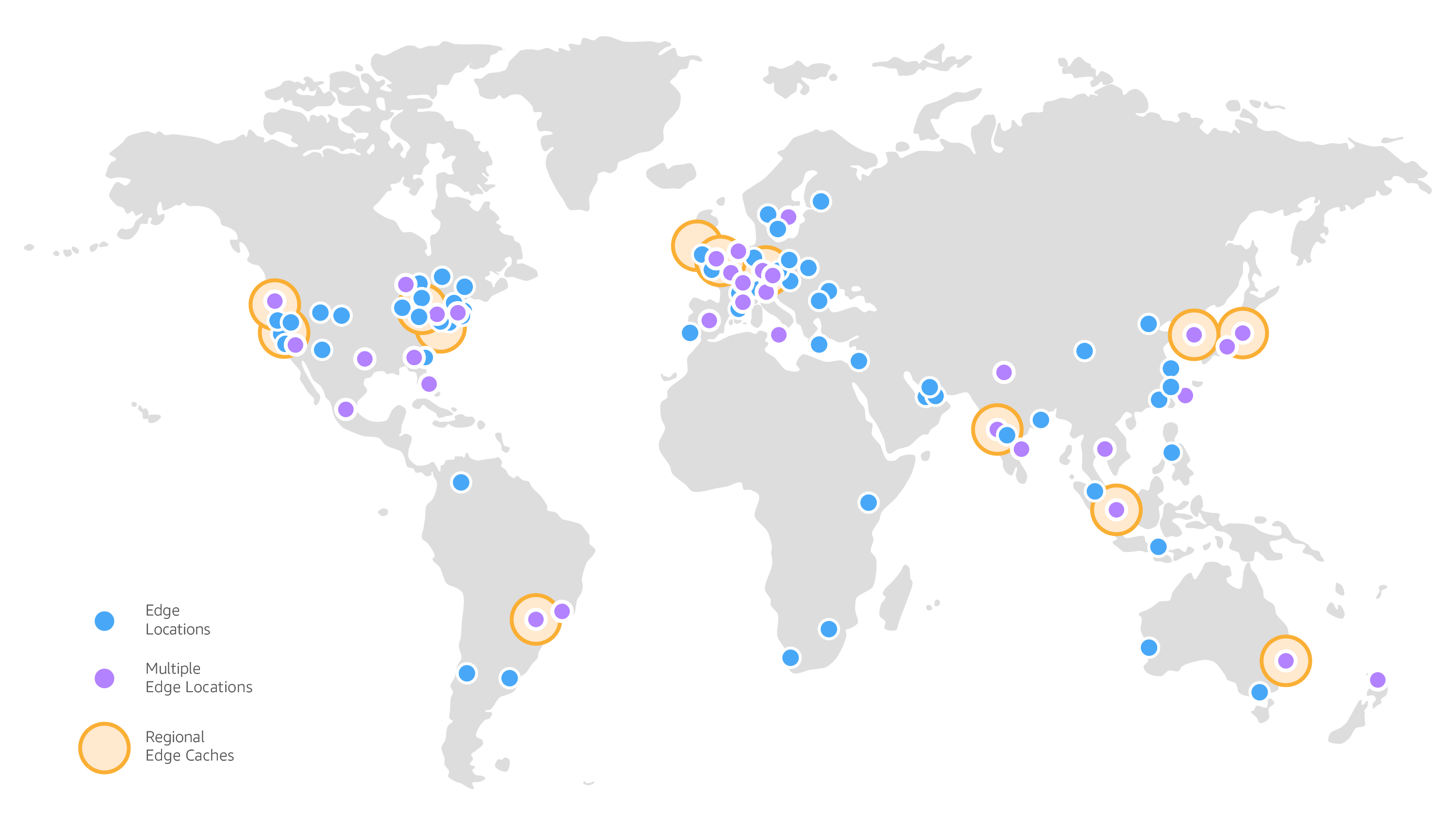

The next image shows all of the edge locations within the AWS network:

You can see at a glance that there are far more edge locations than AZs. If you’re able to handle requests at the edge for at least the most highly requested pages on your site - though ideally for all of them - you can significantly improve the performance of your site as experienced by your users.

This is due to the lower latency incurred from receiving and fulfilling the request at a location physically closer to the user making the request, and would be particularly notable in parts of the world with few AZs, such as South America, Africa, and Australia.
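To put rough numbers on this, here is a back-of-the-envelope sketch of the minimum round-trip time over a given distance. It assumes signals travel through optical fibre at roughly 200,000 km/s (about two thirds of the speed of light in a vacuum) and ignores routing detours, connection setup, and processing time, so real latencies will be higher. The function name and the example distances are made up for illustration.

```typescript
// Approximate signal speed in optical fibre (an assumption for this sketch):
// 200,000 km/s is 200 km per millisecond.
const FIBRE_SPEED_KM_PER_MS = 200;

// Lower bound on round-trip time: the signal has to travel there and back.
function minRoundTripMs(distanceKm: number): number {
  return (2 * distanceKm) / FIBRE_SPEED_KM_PER_MS;
}

// Hypothetical distances: a far-away origin server vs. a nearby edge location.
const originRttMs = minRoundTripMs(10000); // 100 ms
const edgeRttMs = minRoundTripMs(500); // 5 ms

console.log(`origin: at least ${originRttMs} ms, edge: at least ${edgeRttMs} ms`);
```

Even as a lower bound, the gap adds up quickly, since loading a page usually involves more than one round trip.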

When and how is a request handled at the edge?

Now that we know what the ‘edge’ is, let’s take a look at how and when a request is handled at one of these edge locations.

Below is a very simple diagram of a request path:

To start with, a client makes a request for a page on your website, and the edge location is the first place where that request is handled.

The best case scenario here is that the edge location is able to fulfill the request in its entirety, and send a response back to the client. But let’s say for a moment that it can’t for whatever reason. Perhaps it needs more information than it has available to it, or a particular service within the cloud provider needs to be used that can’t be accessed from the edge function.

In this case, the edge location will make a request to the origin server where the request will be fulfilled, and a response sent back from the origin server to the edge location. At this point, the edge location has the option to cache the response from the origin server before sending a response back to the client. The caching options available vary by vendor, so doing further research here is highly recommended.
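The request path described above can be sketched roughly as follows. This is a simplified, in-memory model rather than any vendor's API: `handleAtEdge`, `OriginFetcher`, and the `Map`-based cache are hypothetical names used only for illustration, and real edge caches add expiry, invalidation, and vendor-specific rules.

```typescript
// A deliberately simplified model of an edge location with a cache.
// All names here are hypothetical; real platforms expose their own APIs.
type OriginFetcher = (path: string) => Promise<string>;

const edgeCache = new Map<string, string>();

async function handleAtEdge(path: string, fetchFromOrigin: OriginFetcher): Promise<string> {
  // Best case: the edge location can fulfil the request by itself.
  const cached = edgeCache.get(path);
  if (cached !== undefined) {
    return cached;
  }

  // Otherwise, ask the origin server to fulfil the request...
  const body = await fetchFromOrigin(path);

  // ...and optionally cache the response before replying to the client.
  edgeCache.set(path, body);
  return body;
}

// A fake origin that counts how often it is called.
let originCalls = 0;
const fakeOrigin: OriginFetcher = async (path) => {
  originCalls += 1;
  return `<html>content for ${path}</html>`;
};

// Two requests for the same page: only the first one reaches the origin.
async function demo(): Promise<number> {
  await handleAtEdge("/products", fakeOrigin); // cache miss, goes to the origin
  await handleAtEdge("/products", fakeOrigin); // cache hit, served at the edge
  return originCalls;
}
```

Calling `demo()` resolves to the number of times the origin was contacted, which stays at one however many times the same page is requested afterwards.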

The ability to cache at the edge can lead to huge performance gains and simpler site architectures, as we’ll see in a moment in a case study of edge functions in a Jamstack site, where we transition a server-side rendered page into a static one.

Use case: Transitioning a server-side rendered (SSR) page into a static page

Why would we want to do this in the first place? The main reason is that an SSR page is rendered on every request by the origin server, so transitioning it to a static page leads to two improvements:

- A static page can be cached and served on the Content Delivery Network (CDN); an SSR page rendered on every request cannot.

By having the page be hosted on the CDN, it can be accessed much more quickly when fulfilling a request compared to an SSR page.

- The load on the origin server is removed as it is no longer responsible for fulfilling all of those requests.

If the page that is being converted into a static page is a highly requested one, serving it from the CDN reduces the load on the origin server, and will help reduce the cost and performance bottlenecks associated with serving the page as an SSR one.

Use case overview

To set the scene for our use case - let’s imagine for a moment that I have a thriving ecommerce business with users based all over the world, and I have a page that’s the same for all users with the exception of a banner that I show at the top.

The banner contains different messaging depending on where the user is located. At times it might be a sale I’m promoting in a country or city, other times it might be a GDPR notice or a simple ‘welcome’ message.

Let’s take a look at what this Next.js site could look like to achieve this behaviour, solving this both with SSR and with edge functions and a static page.

SSR version

// middleware.ts
import { NextRequest, NextResponse } from 'next/server'

export function middleware(req: NextRequest) {
  // Geolocation data attached to the incoming request
  const { geo } = req
  const country = geo?.country || ''
  // Pass the country to the page as a query parameter
  return NextResponse.rewrite(new URL(`/?country=${country}`, req.url))
}

// MyECommercePage.tsx
import React from "react"
import type { GetServerSidePropsContext } from "next"

export default function MyEcommercePage({ bannerMessage }: { bannerMessage: string }) {
  return (
    <div id="container">
      <div id="banner" style={{border:"solid", padding:"16px"}}>{bannerMessage}</div>
      <div id="content">Common content would be here</div>
    </div>
  )
}

// Runs on the origin server for every request to this page
export async function getServerSideProps({ query }: GetServerSidePropsContext) {
  const userCountry = query.country
  let bannerMessage
  if (userCountry === 'CA') {
    bannerMessage = "Hello Canada! Use promo code CANADA50 for 50% off your order!"
  } else {
    bannerMessage = "Hello world!"
  }
  return { props: { bannerMessage } }
}

To give an overview of what you’re seeing above, when a user makes a request to see MyEcommercePage, the following happens:

- the middleware located on the origin server begins to handle the request

- the country that the user is located in is determined from the req.geo object, and the value is added to the URL before rewriting the request

- the page uses the country in the query parameters to determine what the value of the bannerMessage prop should be

- the HTML of the page is rendered using the value of the prop that is passed in, and the response is sent back to the user

As mentioned above, the downside to this approach is that the origin server needs to run on every one of these requests to build the HTML to send back to the user, and for a highly requested page that can lead to a lot of load on the server, and higher costs associated with serving that page.

You could get around this by making static pages for each region where you have a larger user base, and rewriting or redirecting the user to those pages based on the value of the country in the middleware. The downside to this approach is that it can lead to a lot of duplicated logic, which can easily lead to inconsistencies in your site.
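As a rough sketch of that alternative, the middleware could map the visitor's country to a pre-built static path and rewrite to it. The paths and the `staticPathForCountry` helper below are hypothetical; in a Next.js middleware the returned path would be handed to `NextResponse.rewrite`, as in the SSR example above.

```typescript
// Hypothetical mapping from country code to a pre-built static page.
// Every entry is a separate page that has to be kept in sync by hand.
const regionalPages = new Map<string, string>([
  ["CA", "/ca"],
  ["GB", "/gb"],
]);

// Pick the static page for a country, falling back to the default page.
function staticPathForCountry(country?: string): string {
  return regionalPages.get(country ?? "") ?? "/";
}

console.log(staticPathForCountry("CA")); // "/ca"
console.log(staticPathForCountry("FR")); // "/"
```

The lookup itself is trivial. The cost is in the pages behind it, since each regional page repeats the common content and only differs in its banner.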

Given that, let’s take a look at our static page and edge functions example.

Static and edge functions

// middleware.tsx
import { NextRequest } from 'next/server'
import { MiddlewareRequest } from '@netlify/next'

export async function middleware(req: NextRequest) {
  const { geo } = req
  const country = geo?.country || ''

  // Wrap the request with Netlify's Advanced Next.js Middleware
  const request = new MiddlewareRequest(req)
  // Fetch the static page; its banner says "Hello world!" at this point
  const response = await request.next()

  if (country === 'CA') {
    const message = 'Hello Canada! Use promo code CANADA50 for 50% off your order!'
    // Update both the rendered HTML and the page prop
    response.replaceText('#banner', message)
    response.setPageProp('banner', message)
  }
  return response
}

// MyECommercePage.tsx
import React from "react"

// Runs at build time, so the page can be served from the CDN
export async function getStaticProps() {
  return {
    props: {
      banner: "Hello world!"
    }
  }
}

export default function MyEcommercePage({ banner }: { banner: string }) {
  return (
    <div id="container">
      <div id="banner" style={{border:"solid", padding:"16px"}}>{banner}</div>
      <div id="content">Common content would be here</div>
    </div>
  )
}

Again, to give an overview of what’s happening in the code above:

- the middleware, now located on an edge location rather than the origin server, begins to handle the request

- the country that the user is located in is once again determined from the req.geo object

- the req is wrapped with Netlify’s Advanced Next.js Middleware

- a request is made to the origin, though in this case, because the page being requested is static, this is served by the CDN rather than handled by the origin server itself. It should be noted that at this point in the code, the response contains HTML for the banner that says ‘Hello world!’

- based on the value of country, we’re updating the HTML and page prop before returning the response to the user. Now, if the user is based in Canada, the HTML and page prop would have the order discount code message that we’d want to show Canadian customers

By performing localization logic like this on the edge, we also have the option to cache this localized response at that edge location.

This means that other users in the same area, who are highly likely to want the same version of the page, will benefit from significantly reduced latency because the cached response is physically closer to where they are.
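One way to picture this is a cache at the edge location keyed by both the page path and the visitor's country, so that each localized variant is built once and then reused. The sketch below is a simplified in-memory model: `localizeBanner` is a stand-in for the HTML rewriting done by the middleware, not Netlify's implementation, and all names are hypothetical.

```typescript
// Simplified model of caching localized responses at one edge location.
// All names are hypothetical; real caching options vary by vendor.
const localizedCache = new Map<string, string>();
let transformCount = 0;

// Stand-in for the HTML rewriting step: swap the default banner text.
function localizeBanner(staticHtml: string, country: string): string {
  transformCount += 1;
  if (country === "CA") {
    return staticHtml.replace(
      "Hello world!",
      "Hello Canada! Use promo code CANADA50 for 50% off your order!"
    );
  }
  return staticHtml;
}

function serveLocalized(path: string, country: string, staticHtml: string): string {
  // Include the country in the cache key so variants don't overwrite each other.
  const key = `${path}|${country}`;
  const cached = localizedCache.get(key);
  if (cached !== undefined) {
    return cached;
  }
  const html = localizeBanner(staticHtml, country);
  localizedCache.set(key, html);
  return html;
}

const page = '<div id="banner">Hello world!</div>';
const first = serveLocalized("/", "CA", page); // builds the Canadian variant
const second = serveLocalized("/", "CA", page); // reuses the cached variant
console.log(first === second, transformCount); // true 1
```

Keying by country is an assumption that suits this banner example. A site that localizes by city or language would need a different key.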

Edge vs Origin functions?

So how do edge functions compare to the standard serverless functions that we’re generally familiar with?

Edge functions, compared to standard serverless functions:

- are expected to run often, potentially on every request

Serving localized content such as in the example above is one such use case for edge functions, but other common use cases include authenticating users and A/B split testing.

- have lower CPU time available to them

In order to ensure that these edge functions are performant given how frequently they are being run, cloud providers impose a lower CPU time limit on them. In practical terms, this means that you can do fewer operations within that function compared to an origin function.

This limitation varies between vendors and within the product offerings they have, so it’s worth investigating this further to see if your cloud provider has an acceptable limit for your use case.

- have limited integration with other cloud services

To use AWS as an example, they advertise about 200 AWS services that integrate with their standard Lambda offering. The number of AWS services that integrate with edge functions is much lower. This is worth noting in case you have a use case that needs an integration with another service in your cloud provider’s product offering, as that could prevent it from moving to an edge function.
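To make one of the use cases listed above more concrete, here is a minimal sketch of A/B split testing logic that is cheap enough to run on every request. It assumes each visitor has some stable identifier (for example a cookie value). The `assignBucket` helper and the choice of the FNV-1a hash are illustrative assumptions, not something prescribed by any particular edge platform.

```typescript
// 32-bit FNV-1a hash over the string's UTF-16 code units. It is small, fast,
// and deterministic, which suits the limited CPU time of edge functions.
function fnv1a(input: string): number {
  let hash = 0x811c9dc5; // FNV offset basis
  for (let i = 0; i < input.length; i++) {
    hash ^= input.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193); // multiply by the FNV prime (32-bit)
  }
  return hash >>> 0; // convert to an unsigned 32-bit integer
}

type Bucket = "A" | "B";

// The same visitor ID always lands in the same bucket, so nothing needs to
// be stored or looked up to keep the experience consistent across requests.
function assignBucket(visitorId: string): Bucket {
  return fnv1a(visitorId) % 100 < 50 ? "A" : "B";
}

const bucket = assignBucket("visitor-123"); // hypothetical visitor ID
console.log(bucket);
```

Because the assignment is a pure function of the visitor ID, it needs no calls to other cloud services, which fits the limited integrations described above.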

Conclusion

We’ve just touched the tip of the iceberg in terms of edge capabilities by looking at a simple use case of serving localized content that went from being served by the origin server to being served on the CDN and at the edge.

We’re starting to see a rise in popularity of ‘edge first’ frameworks like Fresh, and of frameworks that support the edge like Remix, where handling requests at the edge is increasingly becoming the preferred default rather than handling them at a potentially very distant origin server.

An ‘edge first’ future is exciting, because the more requests that we can serve closer to our users, the better the experience of our sites will be regardless of where our users are based in the world.

And in Jamstack sites, edge functions play a big part in moving towards that future.
