Brag sheets, AI at the edge, and free tier economics

While my summers are usually filled with BBQs, camping trips, and seeing some of the festivals in my city, this summer needed a bit of time dedicated to something that I haven't done in a while: interviewing for a new role.

I'm fortunate in that I have a fantastic network of folks that I've met throughout my career thus far who have been incredibly generous in referring me to roles at their companies or sending job postings my way when they see them in their network. I can't thank all of them enough for their help.

One of the things I found challenging during this process, especially early on, was getting into the details of technical decisions and trade-offs from previous projects that I hadn't thought about in years. It's moments like these that make me glad I've been diligent about maintaining a brag sheet.

If you've never heard about brag sheets, check out an earlier blog post I wrote on creating your very first brag sheet (there's a link to a talk I gave at a conference for this as well if you prefer watching a video to reading).

And if you know what a brag sheet is and haven't updated yours in a while, this is a friendly reminder to do so while the details of recent work are still fresh in your mind.

You never know when you'll need it and you'll be grateful that you put in the work when you do.

Things I'm musing about

Free tier economics

I've been curious about the economics of free tiers for developer tools ever since PlanetScale (a "database-as-a-service" platform, or DBaaS) removed their free tier several months ago, citing a focus on profitability. While I'm sure it's relatively straightforward to estimate the cost of supporting a free tier, I hadn't made time to do the math myself.

Xata, another DBaaS, recently published a blog post giving some insight into the economics of their free tier, how their architecture keeps that free tier sustainable, and how the same architecture helps in larger-scale use cases.

The biggest takeaway from their post, at least for me, is that it's not just the raw compute and data storage costs that one needs to think about, but also the underlying architecture of the service. A well-thought-out architecture can help the free tier remain cost-effective and flexible in the face of changing usage patterns or potential "noisy neighbour" problems, particularly when resources are shared across users, as is common in free tiers.

Something I'll be thinking about is whether an architecture that isolates free tier users, especially those who are inactive for long periods, is best considered upfront or whether doing so leads to over-engineering. I'm also wondering what the risks to a system and business are if it isn't considered early on, and how painful the resulting problems are to fix later.
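To make the "noisy neighbour" problem a bit more concrete, here's a minimal sketch of one common mitigation: giving each tenant on a shared node its own token bucket, so a busy tenant uses up its own allowance rather than everyone else's. This is not taken from Xata's post or any real service. `TenantRateLimiter`, the tenant IDs, and the numbers are all made up for illustration.

```typescript
// Hypothetical sketch: per-tenant token buckets on a shared free tier node.
// All names and numbers are illustrative assumptions.

interface Bucket {
  tokens: number;       // requests the tenant may still make right now
  lastRefillMs: number; // when the bucket was last topped up
}

class TenantRateLimiter {
  private buckets = new Map<string, Bucket>();
  private capacity: number;        // maximum burst size per tenant
  private refillPerSecond: number; // sustained requests per second per tenant

  constructor(capacity: number, refillPerSecond: number) {
    this.capacity = capacity;
    this.refillPerSecond = refillPerSecond;
  }

  // Returns true if the request may proceed, false if the tenant is throttled.
  // The clock is passed in so the behaviour is easy to reason about and test.
  allow(tenantId: string, nowMs: number): boolean {
    const bucket: Bucket =
      this.buckets.get(tenantId) ?? { tokens: this.capacity, lastRefillMs: nowMs };

    // Top up in proportion to the time elapsed, never beyond capacity.
    const elapsedSeconds = (nowMs - bucket.lastRefillMs) / 1000;
    bucket.tokens = Math.min(
      this.capacity,
      bucket.tokens + elapsedSeconds * this.refillPerSecond,
    );
    bucket.lastRefillMs = nowMs;

    const allowed = bucket.tokens >= 1;
    if (allowed) {
      bucket.tokens -= 1;
    }
    this.buckets.set(tenantId, bucket);
    return allowed;
  }
}

// Usage: a burst of 2 requests, refilling at 1 request per second.
const limiter = new TenantRateLimiter(2, 1);
limiter.allow("noisy", 0);    // true
limiter.allow("noisy", 0);    // true
limiter.allow("noisy", 0);    // false: the noisy tenant has used its burst
limiter.allow("quiet", 0);    // true: the quiet tenant is unaffected
limiter.allow("noisy", 1000); // true: one token came back after a second
```

Rate limiting like this only covers request volume. It says nothing about storage or about reclaiming resources from inactive users, which is where the architectural questions above come in.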

Edge computing and AI

In preparation for my upcoming talk on edge computing at GOTO Chicago, I'm researching recent developments in that space, with a particular focus on AI.

The questions I had going into my research centred around how distributed AI models evolve and adapt, and how issues in the models are managed and addressed when there is potentially limited connectivity with a central origin server that manages the retraining and deployment of AI models.

At a high level, what I've learned so far is that:

- Edge AI is great for tackling problems that require processing data in real-time without constant reliance on being connected to cloud infrastructure;

- AI models can be run on edge devices with low power, low memory, and low connectivity, such as Internet of Things (IoT) devices;

- AI models running at the edge need algorithms that factor in the hardware they run on. On IoT devices, they won't have the capacity that a powerful, highly available server does; and

- Edge AI differs from "Distributed AI". My understanding so far is that:

  - Edge AI = local decision-making on an edge node (whatever that means in the context of the problem domain) with an AI model;

  - Distributed AI = collecting data from the edge AI nodes, retraining models based on the data collected and analysis performed, and deploying updated models to any edge AI nodes that require them.
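Here's how I currently picture that split, as a rough sketch rather than a real system. Everything in it is an assumption for illustration: `EdgeNode`, `Origin`, the `online` flag, and especially the "model", which is just a threshold with a version number, "retrained" with a placeholder rule (mean plus two standard deviations).

```typescript
// Hypothetical sketch: every name here is illustrative, and the "model" is a
// bare threshold standing in for a real trained model.

interface Model {
  version: number;
  threshold: number; // readings above this are flagged
}

class EdgeNode {
  model: Model;
  online: boolean; // whether the node can currently reach the origin
  private buffer: number[] = [];

  constructor(model: Model, online: boolean) {
    this.model = model;
    this.online = online;
  }

  // Edge AI: decide locally, with no network round trip.
  decide(reading: number): boolean {
    this.buffer.push(reading); // kept for later collection
    return reading > this.model.threshold;
  }

  // Hand over buffered readings and start a fresh buffer.
  drain(): number[] {
    const readings = this.buffer;
    this.buffer = [];
    return readings;
  }
}

class Origin {
  private current: Model;
  private collected: number[] = [];

  constructor(initial: Model) {
    this.current = initial;
  }

  // Distributed AI, step 1: collect data from the nodes that are reachable.
  collect(nodes: EdgeNode[]): void {
    for (const node of nodes) {
      if (node.online) {
        this.collected.push(...node.drain());
      }
    }
  }

  // Step 2: "retrain". Placeholder rule: mean plus two standard deviations.
  retrain(): Model {
    const n = this.collected.length;
    if (n === 0) {
      return this.current; // nothing new to learn from
    }
    const mean = this.collected.reduce((sum, x) => sum + x, 0) / n;
    const variance =
      this.collected.reduce((sum, x) => sum + (x - mean) ** 2, 0) / n;
    this.current = {
      version: this.current.version + 1,
      threshold: mean + 2 * Math.sqrt(variance),
    };
    return this.current;
  }

  // Step 3: deploy to reachable nodes running an older model.
  // Returns how many nodes were updated.
  deploy(nodes: EdgeNode[]): number {
    let updated = 0;
    for (const node of nodes) {
      if (node.online && node.model.version < this.current.version) {
        node.model = this.current;
        updated += 1;
      }
    }
    return updated;
  }
}
```

The part that lines up with my original questions is the `online` flag: an offline node keeps making decisions with whatever model it last received, and only hands over its data and picks up a newer model once it can reach the origin again.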

For those interested in digging a bit further, here's a high-level overview and a book that does more of a deep dive:

- "What is Edge AI" on IBM's site

- AI at the Edge by Daniel Situnayake and Jenny Plunkett

Fun things I've been up to

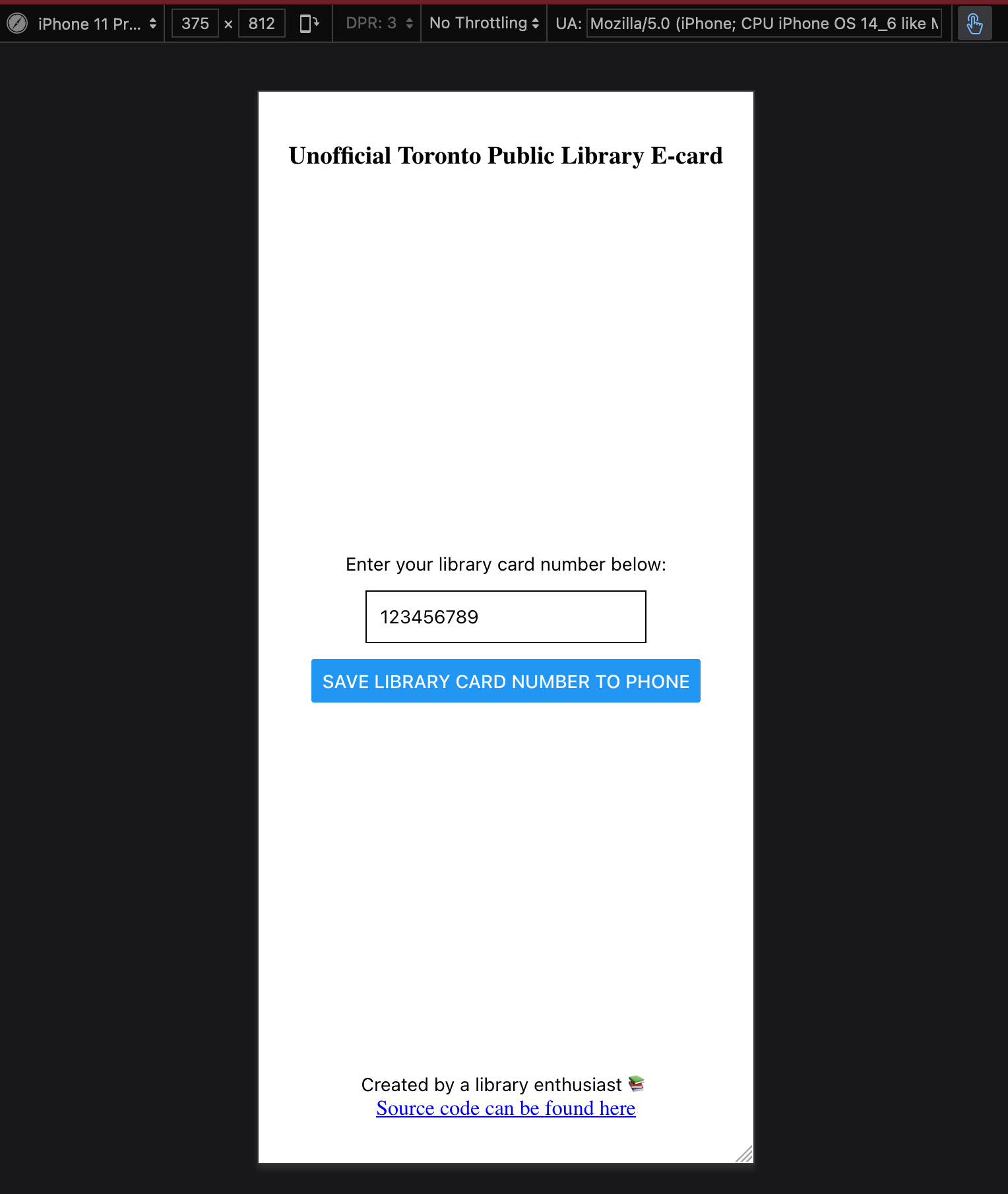

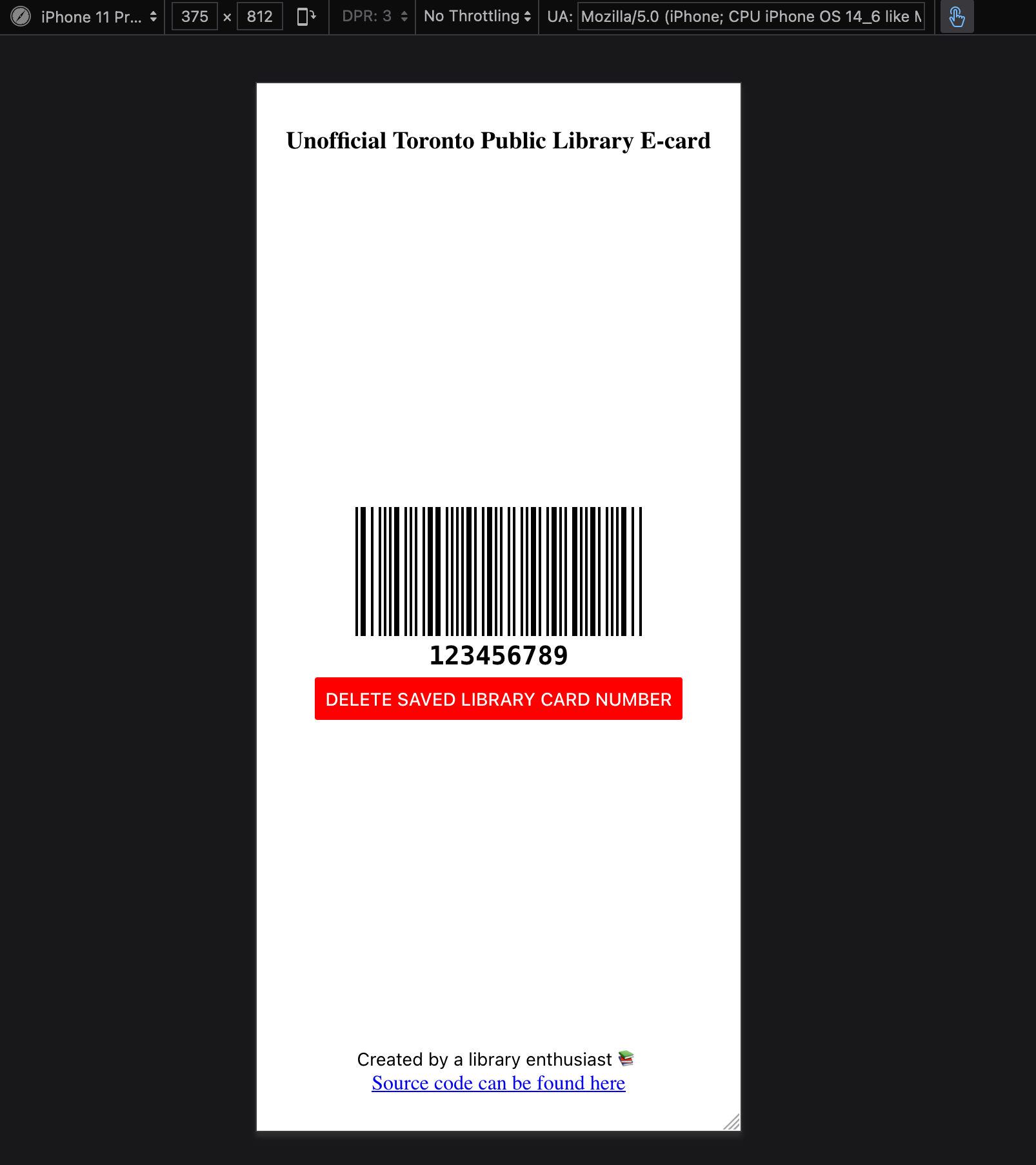

Toronto Public Library (TPL) e-card project

I finished it! 🎉🙌

It's the first side project (outside of conference talks) that I've done in a while, so I'm mostly just proud of myself for sticking with it despite the interruption caused by the cybersecurity incident that TPL had from October 2023 to March 2024.

It doesn't look the prettiest (one of these days I'll learn some design basics 😅), but I can confirm it works!

It's deployed here and the source code (which is published to the public domain so anyone can use it freely) can be found here if you'd like to poke around. Feel free to even fork the project and repurpose it for your own public library!

Some of the details about the project, including some of the things I've learned in the process, can be found in a post I wrote last month.

Since that post, I've learned a bit more about what setting up service workers is like today.

I wanted service workers in this project so that the e-card barcode could still load when the device is offline.

Several years ago (~2016/2017), I tried to get started with service workers and found the configuration a bit tricky to get right. Since then, Google has released a CLI tool that makes setting this up significantly easier.

So if you're thinking of using service workers in your web/mobile project, take a look at that CLI tool; it'll make your life a lot easier.
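For a sense of what a service worker does to keep something like a barcode available offline, here's a minimal hand-written sketch of a "cache-first" strategy. To be clear, this is not the output of the CLI tool and not necessarily how my project does it. `SimpleCache`, `cacheFirst`, and the `"ecard-v1"` cache name are hypothetical, and the cache and fetch are passed in as parameters so the logic can be read and run outside a browser.

```typescript
// Minimal stand-in for the browser's Cache interface (hypothetical name).
// In a real service worker this role is played by the object returned from
// caches.open(...), and fetchFn by the global fetch.
interface SimpleCache<Req, Res> {
  match(request: Req): Promise<Res | undefined>;
  put(request: Req, response: Res): Promise<void>;
}

// Cache-first: answer from the cache when possible, otherwise go to the
// network and remember the result for next time.
async function cacheFirst<Req, Res>(
  request: Req,
  cache: SimpleCache<Req, Res>,
  fetchFn: (request: Req) => Promise<Res>,
): Promise<Res> {
  const cached = await cache.match(request);
  if (cached !== undefined) {
    return cached; // no network needed, so this works offline
  }
  // Rejects if the device is offline and nothing was cached earlier.
  const response = await fetchFn(request);
  await cache.put(request, response);
  return response;
}

// In a real service worker (browser only), the wiring might look like this,
// with "ecard-v1" as a made-up cache name:
//
// self.addEventListener("fetch", (event) => {
//   event.respondWith(
//     caches.open("ecard-v1").then((cache) =>
//       cacheFirst(
//         event.request,
//         {
//           match: (req) => cache.match(req),
//           // Store a clone, because a Response body can only be read once.
//           put: (req, res) => cache.put(req, res.clone()),
//         },
//         (req) => fetch(req),
//       ),
//     ),
//   );
// });
```

The catch with cache-first is that the asset has to be fetched at least once while online before it can be served offline, which is one reason tooling that pre-caches assets at install time is so handy.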
